100+ Best ETL Tools: Features, Pricing & Benefits (September 2025)

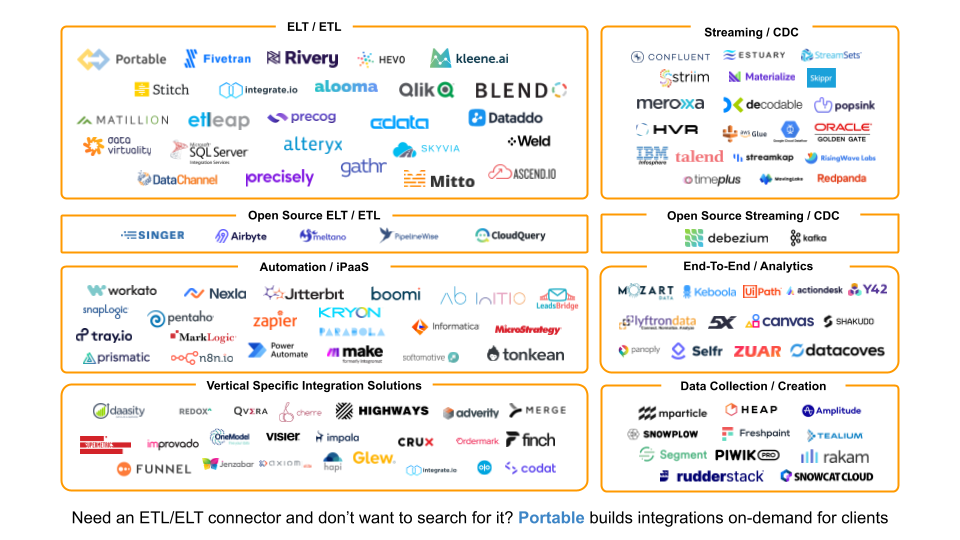

What are the main types of ETL tools?

ETL tools can be classified into four main groups:

- On-premises ETL

- Open-source ETL

- Cloud-based ETL

- Hybrid ETL

Cloud-based ETL

Cloud-based ETL solutions are hosted and run on the provider's servers and cloud infrastructure. The organization pays a subscription fee to use the tool, and the supplier is responsible for maintaining and updating it. Cloud ETL tools create value by powering insights or automation. For instance, a company doing a deep dive into its recruiting pipeline might use cloud-based ETL pipelines and a service provider such as HR Analytics to build automated reporting.

Portable, Fivetran, Stitch, Matillion, and Google Cloud Dataflow are a few notable cloud-based ETL solutions.

On-premises ETL

On-premises ETL tools run on a company's own infrastructure. They are typically owned and managed by the organization, which has complete control over the tool and the data it processes.


Open Source ETL

Open-source ETL solutions have become popular options with the rise of the open-source movement. Many are free and provide graphical user interfaces for building data-sharing processes and monitoring data flow.

Some of the most popular open-source ETL tools are Apache NiFi, Airbyte, Singer, and Meltano.

Hybrid ETL

Hybrid ETL tools can run on either the organization's own infrastructure or the provider's cloud infrastructure, depending on the organization's needs. They combine the scalability and convenience of cloud-based tools with the control and flexibility of on-premises tools.


Key considerations while evaluating ETL tools

- Data management

- Data transformation

- Data ingestion

- Data quality

- Data infrastructure

- Data scalability

- QA management

- Cost

100+ Best ETL Tools & Solutions: List for 2025

Here is a list of the top ETL tools:

- Portable

- Integrate.io

- Upsolver

- Apache Hive

- Blendo

- Stitch

- AWS Glue

- Apache NiFi

- IOblend

- Fivetran

- Dataddo

- Domo

- Jaspersoft ETL/Talend Open Studio

- CloverDX

- Informatica PowerCenter

- Apache Airflow

- Qlik Compose

- IBM InfoSphere DataStage

- SAP BusinessObjects Data Services

- Hevo Data

- Enlighten

- Azure Data Factory

- ETLWorks

- Microsoft SQL Server Integration Services (SSIS)

- AWS Data Pipeline

- Skyvia

- Toolsverse

- IRI Voracity

- Dextrus

- Astera Centerprise

- Improvado

- Onehouse

- Sybase ETL

- Cognos Data Manager

- Matillion

- Oracle Warehouse Builder

- SAP BusinessObjects Data Integrator

- Oracle Data Integrator

- Ab Initio

- IBM InfoSphere Information Server

- Logstash

- Singer

- DBConvert Studio

- Workato

- Keboola

- Flowgear

- StarfishETL

- Matillion ETL

- CData Sync

- Mule Runtime Engine

- Striim

- Talend Data Fabric

- StreamSets

- Confluent Platform

- Alooma

- Adverity Datatap

- Syncsort

- Adeptia ETL Suite

- Apatar ETL

- SnapLogic Enterprise

- OpenText Integration Center

- Redpoint Data Management

- Sagent Data Flow

- Apache Kafka

- Apache Oozie

- Apache Falcon

- GETL

- Anatella

- EplSite ETL

- Scriptella ETL

- Apache Crunch

- Airbyte

- Meltano

- Visier

- Funnel.io

- Daasity

- Alteryx

- Kleene.ai

- Data Virtuality

- Precog

- Rivery

- Etleap

- Precisely Connect

- Gathr

- Boomi

- Ataccama

- Prospecta

- Xtract.io

- Materialize

- Xplenty

- DBSoftlab

- Flatfile

- Popsink

- Meroxa

- SAS Data Integration Studio

- Bubbles

- Everconnect

- Mitto ETL+

- Optimus Mine

- Polytomic

- Shipyard

- Google Cloud Data Fusion

- Pentaho Kettle

1. Portable

Portable is an ETL platform that offers connectors for more than 1,000 data sources.

What's so great about Portable?

- Portable is one of the best data integration tools for teams dealing with long-tail data sources.

- Portable offers ETL connectors you won't find on Fivetran, at a fraction of the cost.

- The Portable team will design and build bespoke connectors on request, with turnaround times as short as a few hours.

Top Features

- Custom data source connectors are built on demand at no extra charge, with maintenance included.

- Hands-on support is available 24 hours a day, seven days a week.

- A large catalog of ready-to-use data connectors.

- Data workflows into the major data warehouses.

Pricing

Portable offers a free plan with no limits on volume, connectors, or destinations for manually triggered data syncs. Automated data transfers cost a flat $200 per month. For enterprise requirements and SLAs, contact sales.

Pros

- More than 1,000 ETL connectors built for specialized applications.

- Portable's data connectors are easily transportable between contexts, so you can use them on other devices or platforms as needed.

- New data source connectors are built free of charge within hours or days.

- Connector maintenance is free of charge.

Cons

- Only available in the United States.

- Portable does not support enterprise sources such as Oracle; it focuses on long-tail data sources.

- There is no support for data lakes.

Who is Portable best suited for?

Portable is ideal for teams seeking long-tail ETL connectors not supported by Fivetran.

Source: https://portable.io/

2. Integrate.io

Integrate.io is a low-code data pipeline platform specializing in operational ETL, which helps companies automate business processes and scale manual data preparation. Its three core use cases are file data preparation and B2B data sharing; preparing and loading data into CRMs and ERPs such as Salesforce, NetSuite, and HubSpot; and powering data products with real-time database replication.

Top Features

- Simple Data Transformations

- Simple Workflow Design for Defining Task Dependencies

- Data Security and Compliance

- Diverse Data Source and Destination Options

- Excellent Customer Service

Pricing

- Entry-level ETL and Reverse ETL plans start at $15,000 per year.

- Professional ETL and Reverse ETL plans start at $25,000 per year.

- For the Enterprise edition, contact the sales team.

Pros

- Integrate.io offers a simple drag-and-drop interface that lets even non-technical users create and manage integrations.

- Integrate.io offers many pre-built connectors to major business applications, which can save significant time and effort when connecting disparate systems.

- Integrate.io is designed to handle large volumes of data and can readily scale to meet an organization's demands.

Cons

- Because Integrate.io focuses on making integrations easy to set up and manage, it may offer fewer advanced features than more robust enterprise-grade integration platforms.

- Errors in complex Integrate.io (formerly Xplenty) pipelines are difficult to debug.

- Error logs are not always useful.

Who is Integrate.io best suited for?

Integrate.io is best suited for small to medium-sized firms or departments of bigger enterprises that need to connect and automate their business systems and data quickly. It is especially ideal for firms with minimal IT resources or technical experience who need to swiftly integrate multiple platforms.

3. Upsolver

Upsolver is an easy-to-use, highly scalable data integration tool for teams dealing with high-volume, complex data continuously loaded from streaming, file, and operational database sources into Snowflake and Apache Iceberg-based lakehouses. With Upsolver, you enforce data quality at the source, minimizing the time and effort required to chase down and fix issues.

Top Features

- Easy-to-use, no-code onboarding

- Flexible developer experience using SQL, a Python SDK, and dbt Core integration

- Hardened, production-grade streaming, object store, and database (CDC) connectors

- Built-in data quality validation and alerting to detect and stop issues early

- Built-in data observability, consolidating tools and integrations

Pricing

Upsolver offers three editions that can be used on their managed cloud (SaaS) or as a fully managed solution deployed into your AWS VPC.

Each edition includes a base software fee, billed per month, that includes a set of features and support. In addition, users are charged for the amount of data they ingest (compressed).

- Startup edition: $1,999/mo software license

- Standard edition: $4,999/mo software license

- Enterprise: Call for pricing

Data volume pricing is listed on Upsolver's website.

Pricing example: on the Startup edition, ingesting 10 TB per month from Kafka to Snowflake at $150 per TB, the estimated cost is $1,999 + ($150 × 10 TB) = $3,499 per month.
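The worked example above reduces to a base license fee plus a per-TB volume charge. This is a minimal sketch: the $150-per-TB rate is taken from the example's own arithmetic, and the function name is hypothetical. Check Upsolver's published volume pricing for real rates.

```python
# Monthly software license for the Startup edition, as quoted above (USD).
STARTUP_LICENSE_USD = 1_999


def estimate_monthly_cost(license_usd: float, tb_ingested: float, usd_per_tb: float) -> float:
    """Base license fee plus the volume charge for (compressed) data ingested."""
    return license_usd + usd_per_tb * tb_ingested


# Startup edition, 10 TB per month at the example's $150/TB rate.
cost = estimate_monthly_cost(STARTUP_LICENSE_USD, tb_ingested=10, usd_per_tb=150)
print(f"${cost:,.0f}/mo")
```

With these inputs the function returns 3499, matching the $3,499/mo estimate.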

Pros

- Simple to use and requires almost no maintenance

- Generates code that can be easily versioned, tested, and automated

- Guarantees exactly-once, ordered, and deduplicated data

- Built-in data quality and observability

- APIs, a Python SDK, and dbt Core integration

- Supports writing to data warehouses, lakehouses, and data lakes

- Flexible architecture supporting both ELT and ETL (with SQL transformations)
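The pros list mentions exactly-once, ordered, and deduplicated delivery. Upsolver's internal mechanism is not described here, so this is only a generic sketch of what that guarantee means for downstream data. It assumes each event carries a unique ID and a sequence number, both hypothetical fields.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    event_id: str  # hypothetical unique ID assigned by the producer
    seq: int       # hypothetical per-stream sequence number
    payload: str


def dedupe_and_order(events: list[Event]) -> list[Event]:
    """Drop redelivered events (same event_id) and return the rest ordered by seq."""
    seen: set[str] = set()
    unique: list[Event] = []
    for ev in events:
        if ev.event_id not in seen:  # first delivery wins; retries are ignored
            seen.add(ev.event_id)
            unique.append(ev)
    return sorted(unique, key=lambda ev: ev.seq)
```

A redelivered event with an ID already seen is discarded, so each event appears exactly once in sequence order however many times the source retries it.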

Cons

- No SaaS source connectors

- Only deploys on AWS

- The Startup edition charges extra for CDC connectors

Who is Upsolver best suited for?

Upsolver is ideal for organizations looking to put high-volume, production-grade data integration in the hands of data producers. With Upsolver, they avoid managing manual tasks (scheduling, orchestration, deduplication, schema evolution) and can easily follow DataOps best practices (versioning, CI/CD, testing).

Source: https://www.upsolver.com/

4. Apache Hive

Apache Hive is a data warehouse system built on top of Hadoop. It uses a SQL-like query language, HiveQL, to read, write, and manage massive datasets stored in the Hadoop Distributed File System (HDFS) and compatible storage. Its metastore is also used by other big data engines such as Apache Spark and Apache Impala.

One of Hive's key features is its ability to compile SQL-like queries into MapReduce jobs (or, in newer versions, Apache Tez or Spark jobs) that run on a Hadoop cluster.
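To illustrate the query-to-MapReduce idea, here is a conceptual sketch in plain Python of how an aggregation such as `SELECT country, COUNT(*) FROM visits GROUP BY country` splits into map, shuffle, and reduce phases. The `visits` table and `country` column are hypothetical, and this is not Hive's actual execution engine.

```python
from collections import defaultdict
from itertools import chain


def map_phase(rows):
    """Emit (key, 1) for each row, keyed by the GROUP BY column."""
    for row in rows:
        yield row["country"], 1


def shuffle_phase(pairs):
    """Group emitted values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Apply the aggregate (COUNT) to each key's values."""
    return {key: sum(values) for key, values in groups.items()}


def run_query(splits):
    # Each input split is mapped independently (in parallel on a real cluster).
    mapped = chain.from_iterable(map_phase(split) for split in splits)
    return reduce_phase(shuffle_phase(mapped))


splits = [[{"country": "US"}, {"country": "DE"}], [{"country": "US"}]]
print(run_query(splits))
```

The shuffle is where a real cluster moves data across the network. It is one reason Hive queries carry noticeable latency even on small inputs.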

Top Features

- SQL-like query language (HiveQL)

- Data Partitioning and Table Bucketing

- UDFs (User-Defined Functions)

- Support for Different File Formats

- Performance Optimization

- Integration with other Hadoop components

- Multi-Language and Multi-user support

Pricing

Apache Hive is free, open-source software released under the Apache License 2.0. The main costs come from the infrastructure it runs on.

Pros

- HiveQL is declarative, like SQL: queries describe the result rather than procedural steps, so they stay short (though this also means HiveQL lacks procedural functionality).

- Hive is a dependable batch-processing framework that can serve as a data warehouse on top of the Hadoop Distributed File System.

- Hive can handle petabyte-scale datasets.

- A query that would take around 100 lines of Java code can often be written in about four lines of HiveQL.

Cons

-

Apache Hive only supports OLAP; online transaction processing is not supported (OLTP).

-

Because it takes time to produce a result, Hive is not used for real-time data querying.

-

Subqueries are not permitted.

-

The latency of the apache hive query is really high.

Who is Apache Hive best suited for?

Apache Hive suits data warehousing and data analysis workloads and can be used for a variety of batch data processing and analytical tasks. It is a powerful tool for processing and analyzing massive amounts of data stored in Hadoop.

5. Blendo

Blendo, now part of RudderStack, is a cloud data platform for no-code ELT. It speeds up setup with automation scripts, so you can start loading data into Redshift right away.

Top Features

-

Data Filtering and Data Extraction

-

API Integration

-

Match & Merge

-

Master Data Management

-

Data Integration and Data Analysis

Pricing

- The free plan is limited to three sources.

- The Pro package costs $750 per month and includes modifications.

- Enterprise plan pricing can be customized.

Pros

- Supports 45+ data sources.

- The platform is simple to use and requires no programming knowledge.

- Monitoring and alerts are standard features.

Cons

- Relatively few data sources are supported.

- Data transformation capabilities are limited.

- Teams cannot connect additional data sources to Blendo on their own.

Who is Blendo best suited for?

Blendo is best suited for data teams looking for a no-code platform that covers a small number of data sources.

6. Stitch

Stitch, a data pipeline tool, is part of Talend. It handles data extraction and simple transformations using a built-in GUI, Python, Java, or SQL. Additional services include Talend Data Quality and Talend Profiling.

Top Features

- Replication Frequency

- Warehouse views

- Highly Scalable

- Designed for High Availability

- Continuous Auditing and Email alerts

- Transform Nested JSON

Pricing

- 14-day free trial

- The Standard plan starts at $100 per month for up to 5 million active rows per month, one destination, and ten sources (limited to "Standard" sources).

- The Advanced plan costs $1,250 per month for up to 100 million rows and three destinations.

- The Premium plan costs $2,500 per month for up to 1 billion rows and five destinations.
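The tiers above lend themselves to a simple plan-selection check. This is a minimal sketch that uses only the row and destination limits quoted above. It ignores source limits, and it treats the Standard price as fixed even though that is only a starting price. The `cheapest_plan` helper is hypothetical.

```python
from typing import Optional

# (name, monthly price in USD, max rows per month, max destinations),
# copied from the tiers above; check Stitch's site for current figures.
PLANS = [
    ("Standard", 100, 5_000_000, 1),
    ("Advanced", 1_250, 100_000_000, 3),
    ("Premium", 2_500, 1_000_000_000, 5),
]


def cheapest_plan(rows_per_month: int, destinations: int) -> Optional[str]:
    """Return the lowest-priced plan whose row and destination limits fit."""
    for name, _price, max_rows, max_dest in sorted(PLANS, key=lambda p: p[1]):
        if rows_per_month <= max_rows and destinations <= max_dest:
            return name
    return None  # exceeds every listed tier: contact Stitch sales


print(cheapest_plan(50_000_000, 2))
```

Destination count can push a small workload into a higher tier. For example, 2 million rows sent to four destinations only fits the Premium limits.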

Pros

- Useful automations such as alerts and monitoring

- Supports more than 130 data sources

- Connects to your data source ecosystem

- Based on open-source software

- Simple, powerful ETL built for developers

Cons

-

No on-premise deployment option.

-

Every Stitch plan includes source and destination restrictions.

Who is Stitch best suited for?

Stitch is best suited for teams that use common data sources and need a simple tool for basic data loading into Redshift.

Source: https://www.stitchdata.com/

7. AWS Glue

Amazon Web Services (AWS) Glue is a fully managed extract, transform, and load (ETL) service that makes it simple to move data between data stores. It provides a simple, customizable way to organize ETL processes, and it can automatically discover and classify data so that it is easy to search and query.

The Glue Data Catalog, AWS Glue's single metadata repository, is used to store and track data location, schema, and runtime metrics.

Top Features

- Integrated Data catalog

- Serverless and High Scalability

- Job authoring

- Integration with other AWS services

- Data integration with popular data stores and open-source formats

- Automated code generation

- Monitoring and troubleshooting

Pricing

Because AWS Glue is a pay-as-you-go service, users pay only for the resources they use, with no startup costs or minimum charges. ETL jobs are billed at $0.44 per DPU-hour (data processing unit hour).
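Under per-DPU-hour pricing, a job's cost is DPUs × billed hours × hourly rate. This is a rough sketch, not AWS's billing logic. It assumes per-second billing with a 60-second minimum per run, which matches recent Glue versions but should be confirmed on the AWS pricing page for your Glue version and region.

```python
import math

# Rate quoted above (USD); actual rates vary by region and job type.
PRICE_PER_DPU_HOUR = 0.44


def glue_job_cost(dpus: float, runtime_seconds: float, min_billed_seconds: int = 60) -> float:
    """Estimate one job run's cost: DPUs x billed hours x hourly rate.

    Assumes per-second billing with a minimum billed duration (60 s by default).
    """
    billed_seconds = max(math.ceil(runtime_seconds), min_billed_seconds)
    return dpus * (billed_seconds / 3600) * PRICE_PER_DPU_HOUR


# Example: 10 DPUs running for 30 minutes.
print(round(glue_job_cost(10, 30 * 60), 2))
```

Because of the assumed minimum, very short jobs are billed as if they ran for a full minute.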

Pros

- Because AWS Glue is fully managed, users do not need to configure, maintain, or update the underlying infrastructure.

- AWS Glue's user-friendly interface makes it easy to build and manage data integration jobs.

- Pay-as-you-go pricing means users pay only for the resources they use.

- Supported formats include JSON, CSV, Excel, Parquet, ORC, and Avro, and Grok patterns can be used to parse input data.

Cons

-

To use AWS Glue effectively, customers must have an AWS account and be familiar with these other services.

-

Support for some data sources is limited: AWS Glue provides support for a variety of data sources, however not all data sources receive the same level of support.

-

Spark has difficulty handling joins with high cardinality.

Who is AWS Glue best suited for?

AWS Glue is best suited for organizations that want to discover, prepare, move, and combine data from several sources for analytics, machine learning (ML), and application development.


8. Apache NiFi

Apache NiFi is a web-based, open-source data integration platform maintained by the Apache Software Foundation. Its name comes from "NiagaraFiles," the project's original name at the U.S. National Security Agency. NiFi automates data flow between systems, simplifying the movement and transformation of data from many sources to many targets.

NiFi includes built-in processors for common tasks including filtering, aggregation, and enrichment.
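To show how such processors fit together, here is a plain-Python sketch of a filter, enrich, and aggregate flow in which each stage consumes the previous stage's output. The processor names and record fields are hypothetical. Real NiFi flows are assembled in its web UI from built-in processors, not written like this.

```python
from collections import Counter
from typing import Iterable, Iterator

Record = dict


def filter_processor(records: Iterable[Record]) -> Iterator[Record]:
    """Drop records that fail a routing condition (here: empty or missing status)."""
    return (r for r in records if r.get("status"))


def enrich_processor(records: Iterable[Record], regions: dict) -> Iterator[Record]:
    """Add an attribute looked up from reference data."""
    for r in records:
        yield {**r, "region": regions.get(r["site"], "unknown")}


def aggregate_processor(records: Iterable[Record]) -> Counter:
    """Count records per region."""
    return Counter(r["region"] for r in records)


records = [
    {"site": "s1", "status": "ok"},
    {"site": "s2", "status": ""},
    {"site": "s3", "status": "ok"},
    {"site": "s1", "status": "err"},
]
flow = aggregate_processor(enrich_processor(filter_processor(records), {"s1": "eu", "s2": "us"}))
print(flow)
```

Chaining generators means records stream through each stage one at a time. That loosely mirrors how data moves between connected processors.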

Top Features

- Low latency and High throughput

- Dynamic prioritization

- Flow can be modified at runtime

- Data Flow Automation

- Extensibility and Customization

- Scalability and high Data Security

- Integration with Other Tools

- Monitoring and Alerting

- Easy to Use and Open-source

Pricing

Apache NiFi itself is free, open-source software under the Apache License 2.0. Packaged versions are also sold on the AWS Marketplace, where prices depend on the configuration you choose. The Professional edition listed there costs $0.25 per hour when purchased with an AWS account.

Pros

- NiFi is designed to recover from faults without losing data.

- NiFi includes built-in security mechanisms such as encryption, authentication, and authorization to protect data in transit and at rest.

- NiFi has processors for common tasks such as filtering, aggregation, and enrichment, and it can connect to a wide range of data sources and destinations.

Cons

- If a node is disconnected from the NiFi cluster while a user is modifying the flow, flow.xml can become invalid.

- When the primary node changes, Apache NiFi can experience state persistence issues that occasionally prevent processors from retrieving data from source systems.

Who is Apache NiFi best suited for?

Apache NiFi is a fantastic fit for enterprises that need to process and analyze enormous amounts of data in real-time or near real-time.

9. IOblend

IOblend is an end-to-end enterprise data integration solution with DataOps capability built into its DNA.

Built on top of the kappa architecture and utilizing an enhanced version of Apache Spark™, IOblend allows you to connect to any data source, perform in-memory transforms of streaming and batch data, and sink the results to any destination. There is no need to land your data for staging – perform your ETL in flight, which greatly reduces development and processing times.

Top Features

- Real-time, production-grade, managed Apache Spark™ data pipelines in minutes, using SQL or Python

- Low-code/no-code development that significantly accelerates project delivery (a claimed 10x)

- Automated data management and governance of data in transit: record-level lineage, metadata, schema, eventing, CDC, de-duplication, SCD (including type II), chained aggregations, MDM, cataloguing, regressions, windowing, partitioning

- Automatic integration of streaming and batch data via Kappa architecture and managed DataOps

- Enables robust and cost-effective delivery of both centralised and federated data architectures

- Low-latency, automated, massively parallel data processing (claimed speeds above 10 million transactions per second)
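The feature list mentions SCD type II, a common technique for keeping the history of a dimension. Instead of overwriting a changed record, the current version is closed and a new version is appended. IOblend's own implementation is not shown in this article. What follows is a generic in-memory sketch of the technique with hypothetical field names.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class DimRow:
    key: str                        # business key, e.g. a customer ID
    attrs: tuple                    # tracked attribute values
    valid_from: date
    valid_to: Optional[date] = None  # None marks the current version


def apply_scd2(dim: list[DimRow], key: str, attrs: tuple, as_of: date) -> list[DimRow]:
    """Upsert one incoming record into a type II slowly changing dimension."""
    current = next((r for r in dim if r.key == key and r.valid_to is None), None)
    if current is not None and current.attrs == attrs:
        return dim  # no change: keep history as-is
    # Close the current version (if any) and append the new one.
    out = [replace(r, valid_to=as_of) if r is current else r for r in dim]
    out.append(DimRow(key, attrs, valid_from=as_of))
    return out


dim = apply_scd2([], "c1", ("London",), date(2024, 1, 1))
dim = apply_scd2(dim, "c1", ("Paris",), date(2024, 6, 1))
for row in dim:
    print(row)
```

After the second call the dimension holds two rows for `c1`. The London row is closed on 2024-06-01, and the Paris row is the open, current version.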

Pricing

IOblend is a licensed, desktop application product (not SaaS).

IOblend offers a free Developer Edition that includes the full suite of features. It can be downloaded from their website.

There are also several Enterprise Editions, with prices starting at $4,199 per month for a Standard License (including standard support and training).

Contact IOblend to discuss your specific requirements.

Pros

- Uses managed Spark for true streaming and batch processing

- Simple to use after a short initial training

- Business rules in SQL and Python, so no Spark coding skills are needed

- Virtually no maintenance in production

- Monitoring and alerting on data and schema changes (based on defined thresholds)

- Data pipeline components stored in JSON format for easy reuse and collaboration

- Built-in automated data management and technical governance

- Connects to any data source and sink, using APIs, JDBC, EBS, files

- Automatically creates and maintains data warehouses and tables

- Deploys on the client's infrastructure, taking full advantage of its stringent security protocols

Cons

- No SaaS source connectors

- Initial training is recommended to get acquainted

- Currently runs only on Windows (macOS support coming soon)

- Simplistic UI and basic documentation

Who is IOblend best suited for?

IOblend is best suited for Operational Analytics cases, where speed, data quality and reliability are paramount. Use cases include streaming live data from factories to the automated forecasting models; flowing data from IoT sensors to real time monitoring apps that make automated decisions based on live inputs and historic stats; moving production grade streaming and batch data to and from cloud data warehouses and lakes; powering data exchanges; and feeding applications with data that requires complex business rules and governance policies.

IOblend is also fully compliant with DataOps practices for testing, CI/CD, and versioning.

10. Fivetran

Fivetran is a cloud-based data integration platform that helps enterprises automate data transfer from many sources into a central data warehouse or other destination.

Fivetran uses a fully managed, zero-maintenance architecture, which means that tasks such as data translation, data quality checks, and data deduplication are performed automatically.

Top Features

- Complete integration

- Fast deployment

- Important notifications are always up to date

- Fully managed

- Personalized setup and Raw data access

- Connect any BI tools

- Directly mapped schema and Integration monitoring

Pricing

Fivetran's three editions range in price from $1 to $2 per credit.

- The Starter edition costs $1 per credit.

- The Standard (regular) edition costs $1.50 per credit.

- The Enterprise edition costs $2 per credit.
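Because Fivetran bills per credit, the monthly bill is the number of credits consumed multiplied by the edition's per-credit price. This is a minimal sketch using the prices listed above, with "Standard" standing for the regular edition. How many credits a workload consumes is not covered here, so the credit figure is an input you would supply.

```python
# Per-credit prices (USD) for the three editions listed above.
PRICE_PER_CREDIT = {"Starter": 1.00, "Standard": 1.50, "Enterprise": 2.00}


def monthly_cost(edition: str, credits_used: float) -> float:
    """Monthly bill = credits consumed x the edition's per-credit price."""
    return credits_used * PRICE_PER_CREDIT[edition]


# Compare editions for the same (hypothetical) usage of 1,000 credits.
for edition in PRICE_PER_CREDIT:
    print(edition, monthly_cost(edition, 1_000))
```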

Pros

- Managed services strategy

- Pre-built data analytics schemas

- Low operating expenses

Cons

- Limited Data Transformation Support

- Enterprise data management capabilities are weak.

Who is Fivetran best suited for?

Fivetran is best suited for organizations looking to eliminate manual data integration and reduce the time and resources required to manage data pipelines.

11. Dataddo

Dataddo is an ETL data integration platform that lets you move data between any two cloud services, such as CRM tools, data warehouses, and dashboarding software.

Top Features

- Managed Data Pipelines

- 200+ Connectors

- Infinitely Scalable

- No-Code

- Supports ETL, ELT, Reverse ETL

- Free Pricing Tier

Pricing

Dataddo offers four plans.

- Free: sync data to any visualization tool, with up to three data flows synced once a week.

- Data to Dashboards: $129 per month for hourly data synchronization to any visualization tool.

- Data Anywhere: $129 per month to sync data between any sources and any destinations.

- Headless Data Integration: build your data products and additional payment mechanisms on top of the unified Dataddo API.

Pros

- Countless data extraction possibilities

- A straightforward dashboard

- A wide range of options

Cons

- The free edition only includes pre-built connectors.

- The free edition only includes three data flows. A data flow is a connection between a source and a destination in Dataddo's service.

Who is Dataddo best suited for?

Dataddo is best for non-technical users who do not need many customizations and want to bring data from applications into their business intelligence tools.

12. Domo

Domo Business Cloud is a cloud-based SaaS that allows you to build ETL pipelines and combine data from several sources. It acts as an intermediary between your data sources and your data destination (data warehouse), allowing you to extract data from the former and load it into the latter.

Top Features

- Collaboration & Social BI

- Analytics Dashboards

- Ease of use for content consumers

- Mobile Exploration and Authoring

- Interactive Visual Exploration

- Ease of use to deploy and administer

Pricing

- The base plan costs $83.00 per month.

- A professional plan costs $160.00.

- An enterprise plan costs $190.00.

Pros

- Data can be extracted using more than 1,000 pre-built connectors.

- Domo is compatible with on-premises deployments as well as numerous cloud vendors (AWS, GCP, Microsoft, etc.).

- ETL pipelines can be built from the dashboard using SQL code or no-code visual tools.

Cons

- Because pricing models are tailored for each customer, you will need to contact sales to obtain a quote.

- Some customers report that once you start modifying the scripts and move away from the pre-built automated extractions, Domo's performance drops.

Who is Domo best suited for?

Domo is ideal for enterprise users who want it to be their primary cloud platform for data integration and extraction.


13. Jaspersoft ETL/Talend Open Studio

Jaspersoft ETL, an open-source data integration platform based on Talend Open Studio for Data Integration, lets users design, develop, and run data integration and data transformation processes.

Top Features

- Drag-and-drop process designer

- Activity monitoring

- Dashboard analyzes job execution and performance

- Native connectivity to ERP and CRM applications such as Salesforce, SAP, and SugarCRM

Pricing

Standard plans range from $100 to $1,250 per month, depending on size; annual payments are discounted.

Pros

- Talend Open Studio lowers development costs by cutting data-handling time in half.

- Talend Open Studio is dependable and efficient when working with large datasets, and functional mistakes occur far less often than with manual ETL.

- Talend Open Studio can connect to a variety of databases, including Microsoft SQL Server, Postgres, MySQL, Teradata, and Greenplum.

Cons

- Licensing costs may deter firms looking for a free or low-cost data integration and transformation solution.

- Third-party software dependency: Jaspersoft ETL requires Java and other third-party software components to function.

Who is Jaspersoft ETL/Talend Open Studio best suited for?

It is best suited for organizations that need a dependable, scalable solution for data integration and transformation, especially those that also need integration with reporting, data visualization, and business intelligence solutions.

14. CloverDX

CloverDX was one of the first open-source ETL tools. It offers a Java-based data integration framework that can transform, map, and handle data in many formats.

Top Features

- Data Filtering and Data Analysis

- Match & Merge

- Data Quality Control

- Metadata Management

- Version Control

- Access Controls/Permissions

- Third-Party Integrations

Pricing

CloverDX is offered as two products, CloverDX Designer and CloverDX Server. Each has a 45-day trial period, after which standard pricing applies.

Pros

- Automate complex operations

- Validate data before sending it to the destination system

- Build data quality feedback loops into your operations
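The "validate before load" and feedback-loop ideas above can be sketched generically. Records that fail checks are routed to a reject list with their errors attached, so they can be fixed upstream instead of reaching the destination. This is not CloverDX's API, and the field names and rules are hypothetical.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate(record: dict) -> list[str]:
    """Return a list of rule violations (empty if the record is valid)."""
    errors = []
    if not record.get("id"):
        errors.append("missing id")
    if not EMAIL_RE.match(record.get("email", "")):
        errors.append("invalid email")
    if record.get("amount", 0) < 0:
        errors.append("negative amount")
    return errors


def split_for_load(records):
    """Separate loadable records from rejects; rejects carry their errors."""
    valid, rejects = [], []
    for rec in records:
        errs = validate(rec)
        if errs:
            rejects.append({**rec, "_errors": errs})
        else:
            valid.append(rec)
    return valid, rejects


good, bad = split_for_load([
    {"id": "1", "email": "a@b.com", "amount": 5},
    {"id": "", "email": "bad", "amount": -1},
])
print(len(good), bad[0]["_errors"])
```

Feeding the reject list back to the source owners closes the feedback loop.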

Cons

- The learning curve is somewhat steep at first.

- If a transformation graph is poorly constructed, large multi-step jobs can run short of memory.

Who is CloverDX best suited for?

CloverDX suits a wide range of extract, transform, and load jobs and is especially well suited to large-scale data processing.

15. Informatica PowerCenter

Informatica PowerCenter is an ETL tool from Informatica Corporation that lets you connect to and retrieve data from multiple data sources. According to Informatica, it achieves a 100% implementation ratio, and its instructions and software accessibility are significantly simpler than in earlier ETL approaches.

Top Features

- Role-based tools and agile processes

- Graphical and code-free tools

- Grid computing

- Distributed processing

- High availability, adaptive load balancing, dynamic partitioning, and pushdown optimization

Pricing

- Professional Edition: a licensed edition with an annual cost of $8,000 per user.

- Personal Edition: free to use as needed.

Pros

- Includes built-in intelligence to boost performance.

- Helps modernize the data architecture.

- Provides a distributed error-logging system.

Cons

- Debugging workflows and mappings in Informatica PowerCenter is challenging.

- The Lookup transformation consumes more CPU and memory on large tables.
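The memory cost of lookups on large tables comes from caching. A cached lookup loads the lookup table into memory once and then matches each source row against it, so memory grows with the size of the lookup table. This is a generic sketch of that pattern, not Informatica's implementation, and the table and column names are hypothetical.

```python
def build_lookup_cache(lookup_rows, key_col, value_col):
    """Load the whole lookup table into memory (memory grows with table size)."""
    return {row[key_col]: row[value_col] for row in lookup_rows}


def apply_lookup(source_rows, cache, key_col, out_col, default=None):
    """Match each source row against the cache; unmatched keys get `default`."""
    for row in source_rows:
        yield {**row, out_col: cache.get(row[key_col], default)}


cache = build_lookup_cache([{"cust_id": 1, "segment": "gold"}], "cust_id", "segment")
orders = [{"order": 10, "cust_id": 1}, {"order": 11, "cust_id": 2}]
print(list(apply_lookup(orders, cache, "cust_id", "segment", default="unknown")))
```

Filtering the lookup table down to the needed rows and columns before caching is a common way to reduce that footprint.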

Who is Informatica PowerCenter best suited for?

PowerCenter is best suited for businesses that benefit from lower training costs. Because the software is widely adopted, it is also easier to hire employees who already know it.

16. Apache Airflow

Apache Airflow is an open-source framework for programmatically authoring, scheduling, and monitoring workflows. It is written in Python and defines workflows as directed acyclic graphs (DAGs) of tasks. Airflow was created at Airbnb in 2014 and has since become one of the most popular open-source projects in data engineering.
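To make the DAG model concrete, here is a conceptual sketch of what a DAG scheduler does. Given each task's upstream dependencies, it runs a task only after all of them have finished, and independent tasks can run in parallel. It uses Python's standard `graphlib` module (Python 3.9+), not Airflow, and the task names are hypothetical. In Airflow itself you would declare tasks as operators inside a DAG and wire them with `>>`.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it starts.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
    "notify": {"load"},
}


def execution_batches(deps):
    """Group tasks into batches whose members can run in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # also raises CycleError if the graph is not acyclic
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


for batch in execution_batches(dependencies):
    print(batch)
```

The acyclic requirement is what guarantees such an order exists. A cycle would leave some task waiting on itself.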

Top Features

- Workflow authoring

- Open source

- Robustness and scalability

- A web-based UI for monitoring the status of workflows and tasks, plus a built-in system for sending alert emails when tasks fail

- Dynamic DAG (directed acyclic graph) generation

Pricing

Airflow is free and open-source software distributed under Apache License 2.0.

Pros

- Because workflows are written in Python, teams can draw on a huge pool of existing expertise and work more productively.

- Everything is written in code, giving you complete control over the logic.

- Multiple schedulers and task concurrency: horizontal scalability and high performance

- A large library of hooks: flexibility and simple integrations

Cons

- Workflows are not versioned.

- Inadequate documentation.

- Production setup and maintenance are difficult.

Who is Apache Airflow best suited for?

Airflow is especially well suited to complex workflows that require a high degree of flexibility and control. Companies that have large amounts of data and need to process it reliably and efficiently can also benefit from it.

17. Qlik Compose

Qlik Compose is a data integration and data management platform from Qlik, a business intelligence and data visualization software company. Qlik Compose is designed to help enterprises integrate, manage, and govern their data across multiple systems, databases, and file types.

Top Features

- Data Streaming in Real Time (CDC)

- Automation of Agile Data Warehouses

- Create a Managed Data Lake
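Real-time streaming via CDC (change data capture) replicates a database by reading its stream of insert, update, and delete events and applying them in order to a target copy. This is a generic sketch of the apply step. The event shape (`op`, `key`, `row`) is a hypothetical simplification, not Qlik's format.

```python
def apply_changes(target: dict, events) -> dict:
    """Apply ordered CDC events to a target table keyed by primary key."""
    for ev in events:
        op, key = ev["op"], ev["key"]
        if op in ("insert", "update"):
            target[key] = ev["row"]  # upsert the latest row image
        elif op == "delete":
            target.pop(key, None)  # tolerate deletes for unknown keys
        else:
            raise ValueError(f"unknown op: {op}")
    return target


table = apply_changes({}, [
    {"op": "insert", "key": 1, "row": {"name": "a"}},
    {"op": "insert", "key": 2, "row": {"name": "b"}},
    {"op": "update", "key": 1, "row": {"name": "a2"}},
    {"op": "delete", "key": 2},
])
print(table)
```

Order matters: applying the same events out of sequence could leave a stale row or resurrect a deleted one.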

Pricing

- Data Analytics: Qlik Sense Business costs $30 per user per month.

- Data Analytics: contact the sales team for Qlik Sense Enterprise SaaS pricing.

- Data Integration: contact the sales team for Qlik Cloud Data Integration pricing.

Pros

- Qlik Compose is designed to be simple to use, with a web-based interface that lets you easily connect to data sources, create and modify data models, and manage data.

- It has very fast replication speed.

- Big data integration projects are simple to scale up, which can save a lot of money.

Cons

- Qlik Compose is proprietary rather than open source, so you must pay for a license.

- Connectivity is limited.

- It is somewhat heavyweight for small environments.

Who is Qlik Compose best suited for?

It is ideal for organizations that want to move data safely and efficiently while minimizing operational impact.

18. IBM InfoSphere DataStage

IBM InfoSphere DataStage is an IBM data integration and management platform. It is a component of the IBM InfoSphere Information Server Suite and is intended to assist enterprises in extracting, transforming, and loading (ETL) data across various systems, databases, and file formats.

Top Features

-

A high-performance parallel framework that can be deployed on-premises or in the cloud, so you can quickly deploy the integration runtime on your preferred cloud environment.

-

Enterprise connectivity and expanded metadata management.

-

It handles endpoint-specific details transparently, which can yield large productivity gains over hand-coding.

Pricing

-

The Small plan on IBM Cloud Managed costs $19,000 per month.

-

The Medium plan on IBM Cloud Managed costs $35,400 per month.

-

The Large plan on IBM Cloud Managed starts at $39,400 per month.

-

For Enterprise Edition, please contact the sales team.

Pros

-

Workload and business rules implementation

-

Uses design automation and prebuilt patterns to provide a quick development cycle.

-

Integration of real-time data and an easy-to-use platform

Cons

-

Integrating DataStage with cloud services can be difficult.

-

Database management with DataStage can be cumbersome.

-

Manipulation of deep functions is difficult.

-

Cloud services make it difficult to manipulate tools.

-

The hierarchical phases for parsing and building XMLs and JSONs might be improved.

Who is IBM InfoSphere DataStage best suited for?

It is particularly well suited for enterprises that need parallel data processing and have a budget for commercial solutions. JSON support and a JDBC connector make it easier for businesses to exploit new data sources.

19. SAP BusinessObjects Data Services

SAP BusinessObjects Data Services (BODS) is SAP's data integration and data management platform. BODS is a component of the SAP BusinessObjects BI (Business Intelligence) platform and connects with other SAP products such as SAP HANA and SAP BW (Business Warehouse).

Top Features

-

SAP BODS combines industry-leading data quality and data integration in one platform.

-

It supports multiple users.

-

It includes extensive administrative capabilities as well as a reporting tool.

-

It supports high-performance parallel transformations.

-

With a web-services-based application, SAP BODS is extremely adaptable.

-

It supports scripting languages with extensive function sets.

Pricing

SAP BusinessObjects Data Services does not have a free version. Paid licenses start at $35,000 per year.

Pros

-

Excellent scalability

-

With a drag-and-drop interface, analysts or data engineers can begin utilizing this tool without any specific coding expertise.

-

The tool also offers flexibility in data creation, with numerous ways to load data into SAP, such as BAPIs, IDocs, and batch input.

Cons

-

High purchase price

-

Data Services is geared toward development teams rather than business users.

-

The debugging functionality of Data Services is not as sophisticated as that of other tools.

Who is SAP BusinessObjects Data Services best suited for?

SAP BusinessObjects Data Services is best suited for enterprises already invested in the SAP ecosystem, particularly those employing SAP HANA and SAP BW. It is also appropriate for businesses that require the integration, management, and governance of huge amounts of data and have a budget for commercial solutions.

20. Hevo Data

Hevo Data is a data management and integration tool designed to help businesses integrate data from various sources. Because Hevo Data is a cloud-based platform, customers do not need to worry about installing, configuring, or maintaining the underlying infrastructure.

Hevo lets you replicate data in near real time from over 150 sources into destinations such as Snowflake, BigQuery, Redshift, Databricks, and Firebolt.

Top Features

-

Automated Data Pipeline

-

100+ Data Sources Supported

-

Real-time Data Replication

-

No-code Data Transformation

-

Data Quality and Governance

-

Multi-cloud Support

-

Scalability

-

24/7 Support

-

Dashboard and Reports

-

Data Modeling

-

Retry Mechanism
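A retry mechanism like the one listed above typically re-runs a failed step after a delay instead of failing the whole pipeline. Hevo's own implementation is not described here. The sketch below shows the common exponential-backoff pattern. The function and parameter names are hypothetical, and `sleep` is injectable so the logic can be tested without waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying failures with delays of base, 2*base, 4*base, ..."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts - 1):
        try:
            return operation()
        except Exception:
            # A real pipeline would retry only transient errors (timeouts, rate limits).
            sleep(base_delay * 2 ** attempt)
    # Final attempt: any exception now propagates to the caller.
    return operation()
```

For example, a call that fails twice and then succeeds waits 1 second and then 2 seconds before returning its result.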

Pricing

-

Free: up to one million events per month, but only from the 50+ free data sources.

-

Starter: $239 per month.

-

Business: individual quote.

Pros

-

Because Hevo Data is a fully managed, cloud-based platform, users don't have to worry about installing, configuring, or maintaining the underlying infrastructure.

-

Hevo Data's user-friendly interface makes it easy to build and manage data integration jobs.

-

Hevo Data easily integrates with a wide range of tools and platforms, including reporting, data visualization, and business intelligence applications.

-

Hevo also lets you monitor your workflows so you can address issues before they bring a pipeline to a halt.

Cons

-

Because Hevo Data is a commercial software application, it requires a license to use.

-

Hevo Data supports a wide range of data sources, although not all of them are supported to the same extent.

-

Excessive CPU Utilization

Who is Hevo Data best suited for?

Hevo Data is a powerful and versatile data management and integration solution for enterprises looking for a scalable, fully managed, and user-friendly platform for moving and combining data. Hevo is ideal for data teams that want a no-code platform that still allows custom Python code and supports popular data sources.

Source: https://hevodata.com/

21. Enlighten

Enlighten is a product suite for automated data management. It helps users accurately and efficiently understand the true state of their organization's data.

Top Features

-

Data profiling, discovery, and monitoring

-

Data matching

-

Data Enrichment

-

Web services and API integration

-

Data cleansing and Data integration

-

Address validation and geocoding

-

Real-time data quality

Pricing

Pricing information is not publicly available. To obtain a price quote, please contact the sales staff.

Pros

-

Lower costs

-

Creates a true single customer view

-

Improves operational efficiency

Cons

-

For users who are unfamiliar with the platform, the learning curve may be steep.

-

Because the platform may be unable to handle missing, duplicate, or erroneous data, data quality checks may be required before importing the data.
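The last point suggests running checks before import. Below is a minimal sketch of such pre-load checks. It assumes records arrive as dicts, and `key` and `required` are hypothetical parameters naming the primary-key column and the mandatory fields. It is not an Enlighten feature.

```python
from collections import Counter
from typing import Any


def quality_report(records: list[dict[str, Any]], key: str,
                   required: list[str]) -> dict[str, list]:
    """Flag rows missing required fields and key values that appear more than once."""
    # Key of every row where a required field is absent, None, or empty.
    missing = [
        r.get(key) for r in records
        if any(r.get(field) in (None, "") for field in required)
    ]
    counts = Counter(r.get(key) for r in records)
    duplicates = [k for k, n in counts.items() if n > 1]  # first-seen order
    return {"missing": missing, "duplicates": duplicates}
```

A load step could then refuse to proceed, or quarantine the flagged rows, whenever either list is non-empty.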

Who is Enlighten best suited for?

It is best suited for clients who need accurate, efficient data from the start and want to keep it accurate over time. Enlighten offers an end-to-end data quality suite that gives organizations of all sizes configurable, comprehensive solutions.

22. Azure Data Factory

Microsoft Azure Data Factory (ADF) is a cloud-based data integration and data management tool. It is a component of the Azure platform that is intended to assist enterprises in extracting, transforming, and loading (ETL) data across various systems, databases, and file formats. ADF helps you to build, schedule, and manage data pipelines that move and convert data between different data stores.

Top Features

-

ADF provides a graphical interface for designing, scheduling, and managing data pipelines, which allows you to move and transform data between data stores.

-

ADF is cloud-native and works with Azure services such as Azure Data Lake Storage, Azure SQL Database, and Azure Synapse Analytics (formerly Azure SQL Data Warehouse).

-

Custom pipelines

-

Monitoring and Debugging

-

Orchestrator

Pricing

-

Data Factory operations: read/write starts at $0.50 per 50,000 modified/referenced entities.

-

Monitoring starts at $0.25 per 50,000 run records retrieved.
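Using the starting rates above, a rough estimate for these two meters is simple arithmetic. The sketch assumes charges scale linearly per 50,000 units. It covers only the read/write and monitoring meters listed here, so treat it as a partial estimate, not a full Data Factory bill.

```python
def adf_meter_cost(entities: int, run_records: int,
                   rw_rate: float = 0.50, monitor_rate: float = 0.25,
                   unit: int = 50_000) -> float:
    """Rough cost in USD of the read/write and monitoring meters only.

    Assumes linear proration per `unit` of entities or run records.
    """
    read_write = entities / unit * rw_rate          # $0.50 per 50,000 entities
    monitoring = run_records / unit * monitor_rate  # $0.25 per 50,000 run records
    return round(read_write + monitoring, 2)
```

For example, 200,000 modified/referenced entities and 100,000 retrieved run records work out to 4 × $0.50 + 2 × $0.25 = $2.50.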

Pros

-

ADF is built to handle massive volumes of data and can extend horizontally by adding more nodes to the cluster.

-

The trigger scheduling options are adequate.

-

The UI is easy to use and lets you build ETL pipelines without advanced coding knowledge.

Cons

-

When an error occurs, there is no built-in pipeline exit activity.

-

Azure Data Factory consumes a lot of resources and has problems with parallel operations.

-

The pricing model could be more transparent and easier to find online.

Who is Azure Data Factory best suited for?

Azure Data Factory is best suited for enterprises that want to combine and manage data from a variety of data sources and systems while also using the Azure ecosystem. It is also appropriate for enterprises that require a cloud-based, scalable data integration solution focused on data movement and data transformation.

23. Etlworks

Etlworks is a modern, cloud-first, any-to-any data integration platform that scales with your company. The company aims to use data to help people and organizations solve their most difficult problems.

Top Features

-

Cloud-based solution

-

Enterprise Service Bus

-

Change Replication

-

Support for cloud data warehouses

-

Automatic and manual mapping

Pricing

Starts from $250 per month.

Pros

-

Implementation simplicity.

-

Excellent data warehouse tool!

-

The Etlworks Integrator is a fantastic tool for streamlining operations and data mapping across the company!

Cons

-

There are no debugging tools available.

-

Etlworks may not integrate easily with other systems or technologies that an organization already uses.

Who is Etlworks best suited for?

It suits organizations that want to streamline operations and centralize data mapping across the company.

24. Microsoft SQL Server Integration Services (SSIS)

SSIS is a platform for developing high-performance data integration and workflow solutions in Microsoft SQL Server. It is a component of the Microsoft SQL Server database program that is used to execute data integration and transformation activities.

Top Features

-

Built-in data source connections

-

Built-in tasks and transformations

-

ODBC source and destination

-

Connectors and tasks for Azure data sources

-

Hadoop/HDFS connections and tasks

-

Tools for basic data processing

Pricing

SSIS is part of SQL Server, which comes in editions ranging from free (Express and Developer) to $14,256 per 2-core pack (Enterprise).

Pros

-

It is widely used, well-documented, and has a sizable user base.

-

It is scalable and can handle massive amounts of data, up to petabytes.

-

Destination for dimension and partition processing

-

Transformations for term extraction and term lookup

Cons

-

It necessitates a separate installation and configuration, adding to the total complexity of the data integration procedure.

-

It does not support cloud storage natively, such as S3, Azure storage, and others, and requires additional connectors to integrate with them.

-

It is not suitable for complicated real-time data integration applications.

-

Running many packages in parallel is problematic: SSIS consumes a lot of memory and competes with SQL Server for resources.

Who is Microsoft SQL Server Integration Services (SSIS) best suited for?

It is well suited to complex business problems such as uploading or downloading files, sending email messages in response to events, updating data warehouses, cleaning and mining data, and managing SQL Server objects and data.

25. AWS Data Pipeline

AWS Data Pipeline is a web service that allows you to process and transport data between data stores. It enables you to create data-driven workflows that execute actions on a scheduled, repeated, or on-demand basis.

Top Features

-

Scheduling and automation of data movement and processing tasks

-

Amazon S3, Amazon RDS, Amazon DynamoDB, and additional data sources and destinations are supported.

-

AWS Glue, Apache Hive, and Pig scripts are used to transform data.

-

Data movement and processing across AWS regions and accounts

-

Integration with other AWS services, such as AWS Step Functions, Amazon SNS, and Amazon CloudWatch

Pricing

-

Activities or preconditions running on AWS start at $1.00 per month each for high frequency and $0.60 per month each for low frequency.

-

On-premises activities or preconditions start at $2.50 per month each for high frequency and $1.50 per month each for low frequency.
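Because the rates above are charged per activity or precondition, a monthly total is each count multiplied by its rate, summed. The sketch uses the starting prices from the list. The `"aws"`/`"on_premises"` and `"high"`/`"low"` keys are hypothetical labels, and any other AWS charges an activity incurs are ignored.

```python
# Starting monthly price per activity or precondition, in USD (from the list above).
MONTHLY_RATE: dict[tuple[str, str], float] = {
    ("aws", "high"): 1.00,
    ("aws", "low"): 0.60,
    ("on_premises", "high"): 2.50,
    ("on_premises", "low"): 1.50,
}


def pipeline_monthly_cost(counts: dict[tuple[str, str], int]) -> float:
    """Sum rate x count for each (location, frequency) bucket."""
    return round(sum(MONTHLY_RATE[bucket] * n for bucket, n in counts.items()), 2)
```

For example, three high-frequency activities on AWS plus two low-frequency on-premises preconditions come to 3 × $1.00 + 2 × $1.50 = $6.00 per month.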

Pros

-

It is a fully managed service, so you don't have to worry about provisioning or managing infrastructure.

-

It supports many data sources and destinations, as well as a variety of transformations.

-

It enables data movement and processing between AWS regions and accounts, which can aid with data sovereignty and compliance.

-

It's designed to function in tandem with other AWS services, making it simple to create data integration workflows with many steps.

Cons

-

It doesn't have as many data sources and destinations as other data integration solutions.

-

It can be complex if you're unfamiliar with AWS services.

-

The pipeline definition language is not as user-friendly as those used by other data integration systems.

Who is AWS Data Pipeline best suited for?

It is best suited for businesses that need fault-tolerant, repeatable, and highly available processing for complex data workloads. It also fits data integration scenarios that require moving and processing data across regions or accounts.

26. Skyvia

Skyvia is a cloud-based data integration and management platform that assists enterprises in connecting and managing data across cloud and on-premise apps and databases.

Top Features

-

Salesforce, Dynamics, Zoho, SQL Server, MySQL, Oracle, and more data sources and destinations are supported.

-

Data replication and synchronization

-

Capabilities for data backup and restoration

-

Features for data quality and validation

-

Direct data connectivity between apps

-

Scheduling settings for automated backups

-

A wizard that simplifies connecting to local databases

Pricing

The most basic package starts at $15 per month. The standard plan is $79 per month, while the Professional plan costs $399 per month. Contact customer service for the Enterprise plan.

Pros

-

It is a fully managed, cloud-based service, so you don't have to worry about provisioning or managing infrastructure.

-

It provides a variety of data integration capabilities such as replication, synchronization, and data validation to help assure the quality of your data.

-

Skyvia excels at bidirectional data integration from/to numerous sources on a scheduled basis.

-

The mapping is done automatically, which saves a significant amount of time.

-

There are numerous integration features.
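Skyvia's exact matching rules are not described here. One common approach to automatic mapping, sketched below as an assumption, is to pair columns whose names match after normalizing case and punctuation. Columns with no match are left for manual mapping.

```python
import re


def normalize(column: str) -> str:
    """Lowercase and drop non-alphanumerics: 'Customer_ID' and 'customer id' -> 'customerid'."""
    return re.sub(r"[^a-z0-9]", "", column.lower())


def auto_map(source_cols: list[str], target_cols: list[str]) -> dict[str, str]:
    """Pair each source column with the target column whose normalized name matches."""
    by_name = {normalize(col): col for col in target_cols}
    return {col: by_name[normalize(col)] for col in source_cols if normalize(col) in by_name}
```

For example, `auto_map(["Customer_ID", "Full Name", "Notes"], ["customerid", "FullName", "email"])` maps the first two columns and leaves `Notes` unmapped.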

Cons

-

It doesn't have as many data sources and destinations as other data integration solutions.

-

The synchronization process could be made a little faster.

-

Real-time support is limited.

-

It is not designed for real-time data integration in complex or high-volume scenarios.

Who is Skyvia best suited for?

It's especially valuable for businesses that need to consolidate data from numerous cloud-based systems and sources and make it available for reporting, analytics, and other mission-critical applications.

27. Toolsverse

Toolsverse LLC is a privately held software company headquartered in Pittsburgh, Pennsylvania, that focuses on data integration. Its core products include platform-independent ETL, data integration, and database development tools. In the Data Explorer, users can build complex data integration and ETL scenarios with a drag-and-drop visual designer and scripting languages such as JavaScript.

Top Features

-

Embeddable, open source, and free

-

Fast and scalable

-

Uses target database features to do transformations and loads

-

Manual and automatic data mapping

-

Data streaming

-

Bulk data loads

Pricing

The Personal Edition is free, while ETL Server costs $2,000.

Pros

-

It supports SQL, JavaScript, and regular expressions for improving data quality.

-

Easy to Start

-

No coding unless you want to

-

Customizable
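The first point refers to rule-based cleansing with regular expressions. Below is a minimal sketch of two such rules. The patterns are illustrative assumptions, not Toolsverse's built-in rules, and the email check is deliberately loose rather than RFC 5322 compliant.

```python
import re

# Deliberately loose email pattern: something@something.tld
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}")


def clean_phone(raw: str) -> str:
    """Keep only digits, e.g. '(412) 555-0100' -> '4125550100'."""
    return re.sub(r"\D", "", raw)


def is_valid_email(value: str) -> bool:
    """True if the trimmed value matches the loose pattern in full."""
    return EMAIL_PATTERN.fullmatch(value.strip()) is not None
```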

Cons

-

When connecting Toolsverse ETL with other systems, some users may encounter challenges that are time-consuming and difficult to overcome.

-

Toolsverse ETL may not contain connectors for all of the data sources that a company may want to integrate, requiring additional development work to accommodate them.

Who is Toolsverse best suited for?

It can be a viable alternative for businesses looking for a low-cost solution that does not necessitate considerable development work.

28. IRI Voracity

IRI Voracity is a data management and integration platform created by IRI, a software company specializing in data management and analytics. It is intended to assist enterprises in integrating, managing, and analyzing huge amounts of data from many sources, such as databases, files, and apps.

Top Features

-

Data transformation and Data segmentation

-

Job Design

-

Embedded reporting

-

BIRT, Datadog, KNIME, and Splunk integrations

-

JCL data redefinition

-

CoSort (SortCL) 4GL DDL/DML

Pricing

It offers a free trial period. You can license the platform as an operating expense (OpEx), or as a capital investment for permanent use (CapEx). Contact the sales team for a quote.

Pros

-

Consolidating products simplifies metadata and saves I/O

-

Migrate away from legacy sort software faster

-

Faster, free visual BI in Eclipse

-

Automated, custom table analysis

-

It includes strong data governance and security features.

Cons

-

For beginners, the platform may be difficult to utilize.

-

The solution has a high cost, and it might be an expensive investment for small firms.

-

More sophisticated activities may necessitate the use of specialized technical skills.

Who is IRI Voracity best suited for?

IRI Voracity enables enterprises to locate, understand, and govern data across the enterprise while also improving data accuracy and trustworthiness. IRI Voracity is typically used by large corporations in a variety of industries, including healthcare, banking, retail, and manufacturing.

29. Dextrus

Dextrus is a comprehensive, high-performance no-code solution for creating, deploying, and managing data assets. It supports data ingestion, streaming, cleansing, transformation, analysis, wrangling, and machine learning modeling.

Top Features

-

Create batch and real-time streaming data pipelines in minutes, then automate and operationalize them using the built-in approval and version control system.

-

Create and maintain a simple cloud data lake for cold and warm data reporting and analytics.

-

Using visualizations and dashboards, you may analyze and acquire insights into your data.

-

Prepare datasets for sophisticated analytics by wrangling them.

-

Construct and deploy machine learning models for exploratory data analysis (EDA) and prediction.

Pricing

Offers a 15-day free trial. To obtain a quote, please contact the Sales team.

Pros

-

Quick insights into datasets

-

Query-based and log-based CDC

-

Anomaly detection

-

Push-down optimization

-

Easy data preparation

-

End-to-end analytics

Cons

-

It may not be appropriate for firms that do not have a large amount of data to examine.

-

It sometimes lags or hangs.

Who is Dextrus best suited for?

Dextrus is best suited for businesses that want a comprehensive, high-performance no-code solution for developing, deploying, and administering data assets.

30. Astera Centerprise

Astera Centerprise is an Astera Software data integration and management platform. It is intended to assist enterprises in integrating, managing, and analyzing huge amounts of data from several sources, such as databases, files, and apps.

Top Features

-

Bulk/Batch Data Movement

-

Data Federation/Virtualization

-

Message-Oriented Movement

-

Data Replication & Synchronization

Pricing

Pricing information is not publicly available. Please contact sales for further information.

Pros

-

Simple user interface/GUI for interacting with the application

-

Very adaptable and scalable

-

Excellent Customer Service

-

Data transformation between data sources.

-

The ability to distribute files to a variety of destinations.

Cons

-

No option to direct logging to a tool other than the built-in logger.

-

Workflows on very large datasets can take hours to process, and adding a row index requires extra steps.

-

The performance is a little lacking.

Who is Astera Centerprise best suited for?

It is a versatile solution that can be tailored to a company's requirements, and it has a plethora of features and functionalities that can be used to increase data governance, data quality, and data security.

31. Improvado

Improvado is a data integration and analytics platform that enables companies to connect, organize, and analyze data from many sources. It is designed to help businesses improve their marketing and sales performance by providing a unified view of their data.

Top Features

-

Allows data from many sources, such as advertising platforms, marketing automation systems, and CRM software, to be integrated.

-

Dashboards and reports

-

Support for different cloud environments

-

AI-based insights

-

About 80 SaaS sources

Pricing

All new users receive a 14-day trial. Standard plans range from $100 to $1,250 per month, with discounts for annual payment. Enterprise plans, priced individually for larger enterprises and mission-critical use cases, may include custom features, data volumes, and service levels.

Pros

-

Deep and granular marketing integrations allow you to examine data at the keyword or ad level.

-

Ability to normalize exported metrics, build custom metrics, and map data across platforms

-

It enables users to deduplicate and enrich data from many sources to meet the diverse needs of a client.

-

Excellent for advertising organizations handling campaigns for several clients.

-

View ad creatives directly from your dashboard, a very useful feature that is rarely offered elsewhere.

-

90% less time is spent on manual reporting.

-

There is no need for developers.

-

Completely customizable, with over 300 connectors and more integrations available on request

Cons

-

It may not be appropriate for firms that do not have a large amount of data to examine.

-

The platform may be more expensive than other options.

-

Some of the more detailed features can be confusing, but the support team is excellent at guiding users through them.

-

There may be some initial back and forth with your customer support representative to have your dashboards and reports visualized exactly the way you want.

Who is Improvado best suited for?

Improvado is best suited for firms that need to improve their marketing and sales performance and have a large amount of data to analyze. Organizations in a range of industries, including e-commerce, healthcare, banking, and retail, can use it.

32. Onehouse

Onehouse offers the original lakehouse as a service with quick setup and ingest, incremental ETL, data processing, and data management.

Top Features

-

Onehouse offers industry-leading fast data ingest into the lakehouse to provide fresher data at lower cost for real-time and batch pipelines

-

Onehouse uses open standards at every step, avoiding vendor lock-in

-

Onehouse is easy to use with a no-code ETL UI in addition to an API for authoring complex pipelines

-

Onehouse automates data quality checks and data quarantine, and provides full visibility with pre-built dashboards

-

Onehouse keeps you in control of your data, reducing dependency on competing, proprietary platforms

Pricing

Onehouse charges credits based on compute usage to provide flexible pricing for any workload. Onehouse offers a free trial for new customers.

Pros

-

Real-time data ingestion into the lakehouse with latency of seconds to minutes (not hours to days)

-

ETL is fully incremental, so you only write data that has changed.

-

Onehouse handles the full ETL lifecycle, from ingestion to transformations to data management.

-

Data ingested by Onehouse lives in your cloud account in any open table format, so the data is yours and you can run queries anywhere

Cons

-

Onehouse only offers ETL for data lakehouses, not data warehouses

-

No long-tail connectors; users must stage long-tail data in a supported source such as S3 or Kafka

Who is Onehouse best suited for?

Onehouse is best suited for teams seeking to improve data freshness and reduce data warehouse costs without managing the complexities of a DIY data lakehouse.

Source: https://www.onehouse.ai/

33. Sybase ETL

Sybase is a market leader in data integration. The Sybase ETL tool is designed to extract data from various sources, transform it into data sets, and load it into a data warehouse.

Sub-components of Sybase ETL include Sybase ETL Server and Sybase ETL Development.

Top Features

-

Simple graphical user interface for creating data integration jobs.

-

It is simple to understand, and no additional training is required.

-

The dashboard gives a quick overview of the status of each process.

-

Real-time reporting and improved decision-making.

-

It only works with the Windows operating system.

-

It reduces the cost, time, and human effort required for the data integration and extraction process.

Pricing

There is no pricing information available. For price information, please contact the sales team.

Pros

-

It can extract data from a variety of sources, including Sybase IQ, Sybase ASE, Oracle, Microsoft Access, Microsoft SQL Server, and many others.

-

It enables you to load data into a target database in bulk or via delete, update, and insert statements.

-

It can cleanse, integrate, convert, and split data streams, and the results can then be used to insert, update, or delete data in a target.
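The load options above (bulk loads versus insert, update, and delete statements) can be illustrated with Python's built-in `sqlite3` module. This is a generic SQL sketch, not Sybase ETL itself; the `customers` table, the `load_rows` helper, and its `mode` values are hypothetical, and the upsert syntax needs SQLite 3.24 or later.

```python
import sqlite3


def load_rows(conn: sqlite3.Connection, rows: list[tuple[int, str]], mode: str) -> None:
    """Load (id, name) rows into a hypothetical `customers` table."""
    if mode == "bulk":
        # Bulk append in one batch; raises IntegrityError if an id already exists.
        conn.executemany("INSERT INTO customers (id, name) VALUES (?, ?)", rows)
    elif mode == "upsert":
        # Insert new ids and update existing ones.
        conn.executemany(
            "INSERT INTO customers (id, name) VALUES (?, ?) "
            "ON CONFLICT(id) DO UPDATE SET name = excluded.name",
            rows,
        )
    elif mode == "delete":
        conn.executemany("DELETE FROM customers WHERE id = ?", [(row[0],) for row in rows])
    else:
        raise ValueError(f"unknown load mode: {mode!r}")
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    load_rows(conn, [(1, "Ada"), (2, "Bob")], "bulk")
    load_rows(conn, [(2, "Bobby"), (3, "Cy")], "upsert")
    load_rows(conn, [(1, "Ada")], "delete")
    print(conn.execute("SELECT id, name FROM customers ORDER BY id").fetchall())
    # [(2, 'Bobby'), (3, 'Cy')]
```

Bulk mode is fastest for an empty or append-only target, while the upsert and delete modes keep an existing target in sync with changed source rows.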

Cons

-

Gaps in many aspects of data management

-

The platform may be more expensive than other options.

-

More sophisticated activities may necessitate the use of specialized technical skills.

Who is Sybase ETL best suited for?

Sybase ETL is best suited for enterprises with a significant volume of data that require regular extraction, transformation, and loading. It is also ideal for companies with a technical team capable of deploying and maintaining the solution.

34. Cognos Data Manager

IBM Cognos Data Manager performs ETL operations for high-performance business intelligence. Its multilingual support makes it possible to build a global data integration platform. IBM Cognos Data Manager automates business operations and is available for Windows, UNIX, and Linux.

Top Features

-

A graphical user interface is used to create data integration and transformation jobs.

-

Support for numerous data sources, including relational databases, flat files, and cloud-based data sources like Salesforce and Google Analytics.

-

Data quality and data profiling features are built in to assist in identifying and correcting data issues.

-

The ability to schedule and execute data integration and transformation tasks

-

Support for incremental data loading, allowing organizations to update their data warehouse with new or altered data without reloading the full dataset.
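Incremental loading, the last feature above, is often implemented with a "high-water mark". Each run copies only rows modified after the previous run's watermark, then advances the watermark. Below is a minimal sketch of that pattern, not Cognos's implementation. It assumes each source row carries hypothetical `id` and `updated_at` fields. A query-based approach like this does not detect rows deleted at the source.

```python
from datetime import datetime
from typing import Any


def incremental_load(source: list[dict[str, Any]], target: dict[Any, dict],
                     watermark: datetime) -> datetime:
    """Copy only rows changed after `watermark` into `target`; return the new watermark."""
    changed = [row for row in source if row["updated_at"] > watermark]
    for row in changed:
        target[row["id"]] = row  # insert new rows, overwrite changed ones
    # Advance to the newest change seen; keep the old watermark if nothing changed.
    return max((row["updated_at"] for row in changed), default=watermark)
```

The returned watermark would be persisted between runs, so the next run starts where this one left off instead of reloading the full dataset.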

Pricing

There is no pricing information available.

Pros

-

The graphical user interface allows users to create data integration and transformation operations without having to code.

-

Because Cognos Data Manager supports a wide range of data sources, businesses can easily integrate data from many sources into their data warehouses.

-

Businesses can use built-in data quality and data profiling tools to discover and correct data issues.

Cons

-

Businesses must rely on the vendor for updates and support.

-

Firms must have the IBM Cognos BI platform to use it, so the total cost can be very high.

-

Some users may find the interface confusing, especially those who are new to data integration and transformation.

Who is Cognos Data Manager best suited for?

Cognos Data Manager is ideal for enterprises that require the integration and transformation of data from numerous sources for analysis in a data warehouse or data mart, as well as built-in data quality and data profiling features. It is frequently used in medium to large companies.

35. Matillion

Matillion is a cloud-based data integration and transformation platform that assists enterprises in extracting data from several sources, transforming it, and loading it into data warehouses.

Top Features

-

A user-friendly interface for creating data integration and transformation pipelines.

-

Support for numerous data sources, including relational databases, flat files, and cloud-based data sources like Amazon S3 and Google Sheets.

-

Built-in data transformation tools for filtering, pivoting, and merging data

-

The ability to schedule and execute data integration and transformation tasks

-

Monitoring and logging features are included to aid with troubleshooting and auditing.

Pricing

-

The basic plan costs $2.00 per credit.

-

The advanced plan starts at $2.50 per credit.

-

Enterprise plans begin at $2.70 per credit.
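With per-credit pricing, the cost of a workload is the credits it consumes times the plan's rate. Below is a small comparison using the starting rates above. It assumes cost scales linearly with credits, and it treats the Enterprise figure as a floor rather than a quote. How many credits a given workload consumes is not covered here.

```python
# Starting price per credit in USD for each plan (from the list above).
PRICE_PER_CREDIT: dict[str, float] = {"basic": 2.00, "advanced": 2.50, "enterprise": 2.70}


def compare_plans(credits: float) -> dict[str, float]:
    """Estimated cost of the same credit consumption on each plan."""
    return {plan: round(credits * price, 2) for plan, price in PRICE_PER_CREDIT.items()}
```

For example, 500 credits cost about $1,000 on Basic, $1,250 on Advanced, and at least $1,350 on Enterprise.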

Pros

-

Users may easily design data integration and transformation pipelines using the user-friendly drag-and-drop interface, eliminating the need for coding.

-

Because Matillion supports a wide variety of data sources, businesses may quickly combine data from many sources into their data warehouses.

-

Businesses can use built-in data transformation tools to clean and prepare data for analysis.

Cons

-

Because Matillion is proprietary software, organizations must rely on the vendor for updates and support.

-

Some users may find the interface confusing, especially those who are new to data integration and transformation.

-

The platform may lack the depth of certain more sophisticated ETL systems.

Who is Matillion best suited for?

Matillion is ideal for enterprises that require the integration and transformation of data from numerous sources for analysis in a data warehouse. It's an excellent choice for small and medium-sized organizations, startups, and large enterprises looking to harness the power of the cloud without investing in costly infrastructure.

36. Oracle Warehouse Builder

Oracle Warehouse Builder (OWB) is a data integration and data modeling tool used on the Oracle Database platform to create and manage data warehouses and data marts. It provides a graphical environment for designing and building data integration, data quality, and data modeling tasks.

Top Features

-

Data source connection

-

Data transformations

-

Data modeling

-

Data warehousing

-

It can be used in conjunction with other Oracle technologies such as Oracle BI and Oracle Data Integrator.

Pricing

It was bundled with Oracle Database 11g and is not supported with Oracle Database 12c Release 2 or later. You must pay an additional fee for support and software license updates.

Pros

-

Oracle Warehouse Builder is part of the Oracle Database ecosystem, which means it is strongly integrated with it and may benefit from its features and capabilities.

-

Allows for the creation and deployment of enterprise data warehouses.

-

Allows for the creation and deployment of data marts and e-business applications.

Cons

-

Enterprises must rely on the vendor for updates and support.

-

Firms must have the Oracle Database to use it, which can be rather pricey.

-

Good learning material is hard to find.

-

Poor mapping transformation automation

Who is Oracle Warehouse Builder best suited for?

It is especially well suited for firms that already use Oracle Database and want to leverage its built-in data warehousing features. It is primarily used by medium to large companies because it is part of the Oracle ecosystem.

37. SAP - BusinessObjects Data Integrator

SAP BusinessObjects Data Integrator (BODI) is a data integration tool included with the SAP BusinessObjects BI platform. It enables enterprises to extract, transform, and load data into a data warehouse or data mart from a variety of sources, including relational databases and flat files.

Top Features

- Integrates and loads data into the analytical environment.

- The Data Integrator Web Administrator is a web-based interface for managing multiple repositories, metadata, web services, and job servers.

- Schedules, executes, and monitors batch jobs.

- Runs on Windows, Sun Solaris, AIX, and Linux.

- Works with other SAP technologies such as SAP BusinessObjects Data Services and SAP Business Warehouse.

Pricing

Plans start at EUR 35,000.

Pros

- Batch jobs can be executed, scheduled, and monitored using SAP BusinessObjects Data Integrator.

- Can be used to build any type of data mart or data warehouse.

- Supports Sun Solaris, Windows, AIX, and Linux.

- Integration with other SAP BusinessObjects products improves data integration functionality and efficiency.

Cons

-

Writing customized components is a difficult task.

-

BODI should have some data quality integration in addition to ETL.

-

Code documentation is ready, and component commenting is integrated.

Who is SAP - BusinessObjects Data Integrator best suited for?

It is best suited for businesses that need to extract data from many kinds of sources; process, integrate, and format it; and then load it into a target database.
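The extract, transform, and load flow that BODI automates can be sketched in a few lines of Python. This is a generic illustration, not BODI's API: the CSV content, the `fact_orders` table, and the cleaning rules are hypothetical, and an in-memory SQLite database stands in for the target data warehouse.

```python
import csv
import io
import sqlite3
from typing import Dict, List, Tuple

# Hypothetical flat-file extract; a real job might read files or a source database.
RAW_CSV = """order_id,amount,currency
1,19.99,usd
2,5.00,USD
3,,usd
"""


def extract(text: str) -> List[Dict[str, str]]:
    """Extract: parse the flat file into one dict per row."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: List[Dict[str, str]]) -> List[Tuple[int, int, str]]:
    """Transform: drop incomplete rows, store amounts as integer cents, normalize currency codes."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip rows with a missing amount
        cents = round(float(row["amount"]) * 100)
        cleaned.append((int(row["order_id"]), cents, row["currency"].upper()))
    return cleaned


def load(rows: List[Tuple[int, int, str]], conn: sqlite3.Connection) -> None:
    """Load: write cleaned rows into a target table (SQLite stands in for the warehouse)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_orders "
        "(order_id INTEGER PRIMARY KEY, amount_cents INTEGER, currency TEXT)"
    )
    conn.executemany("INSERT INTO fact_orders VALUES (?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), warehouse)
print(warehouse.execute("SELECT COUNT(*), SUM(amount_cents) FROM fact_orders").fetchone())  # (2, 2499)
```

Keeping the three stages as separate functions mirrors how ETL tools separate source, transformation, and target definitions, so each stage can be tested or swapped on its own.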

38. Oracle Data Integrator

Oracle Data Integrator (ODI) is a data integration tool developed and owned by Oracle Corporation. It is a component of Oracle's data integration platform, which also includes Oracle GoldenGate and Oracle Data Quality. ODI is designed to help developers build data integration solutions for a variety of use cases, including data warehousing, data migration, and real-time data integration.

Top Features

- Training, support, and professional services are available.

- Proprietary licensing

- Design and development environment

- Out-of-the-box integration with databases, Hadoop, ERPs, CRMs, B2B systems, flat files, XML, JSON, LDAP, JDBC, and ODBC (a Java installation is required).

Pricing

A single-processor deployment costs around $36,400.

Pros

- Provides a wide range of functions for complex data integration jobs.

- Provides a scalable, high-performance solution

- Native big data support

- High performance and improved productivity

Cons

- Priced slightly higher than its competitors.

- It sometimes lags or hangs.

- Real-time data integration is harder to set up than batch integration.

- Ingesting data from a wide range of sources can be difficult.

Who is Oracle Data Integrator best suited for?

ODI is ideal for enterprises with high-volume, high-complexity data integration requirements, particularly those involving several data sources and target systems. It is also useful for businesses integrating data across Oracle products.

39. Ab Initio

Ab Initio is a proprietary software platform used to create and manage data integration initiatives. It includes a full range of tools for designing, creating, testing, and deploying data integration solutions. Ab Initio is well-known for its high-performance parallel processing and ability to handle massive amounts of data.

Top Features

- Graphical development

- Batch and real-time processing

- Elastic scaling

- Web services and microservices

- Data formats and connectors

- Metadata-driven applications

Pricing

Ab Initio does not publish pricing for this product.

Pros

- Scalability and performance

- A large number of connectors and a comprehensive set of built-in functionality

- Reusable components and libraries

- Supports both batch and real-time processing.

Cons

- Solutions to specific issues are hard to find.

- Skilled developers are in short supply.

- Some components must be configured with a MAX_CORE value (the maximum memory the component may use), which requires manual calculation.

- A closed, proprietary platform with limited room for customization.

Who is Ab Initio best suited for?

Ab Initio is ideal for enterprises with high-volume, high-complexity data integration requirements, particularly those that handle massive amounts of data and need high-performance processing. It also suits businesses looking for a complete platform for planning, developing, and deploying data integration solutions.

40. IBM - InfoSphere Information Server

InfoSphere Information Server is an IBM product released in 2008. It is a leading data integration platform that helps businesses understand their data and deliver it where it creates value. It is aimed primarily at large enterprises and companies working with big data.

Top Features

- Commercially licensed.

- A comprehensive data integration platform.

- Compatible with Oracle, IBM Db2, and Hadoop.

- Works with SAP through numerous plug-ins.

- Supports an organization's data governance strategy.

- Helps automate business processes to reduce costs.

- Integrates data of all types across different systems in real time.

- Easy to combine with existing IBM-licensed tools.

Pricing

- The Small plan on IBM Cloud Managed costs $19,000 per month.

- The Medium plan on IBM Cloud Managed costs $35,400 per month.

- The Large plan on IBM Cloud Managed starts at $39,400 per month.

- For Enterprise Edition pricing, contact the sales team.

Pros

-

It's pretty impressive when it comes to data encryption.

-

Excellent workflow management effectiveness.

-

Excellent at data configuration, tuning, and repair.

Cons

-

Inadequate web development environment.

-

The distribution of metadata in Jobs is fairly complicated.

-

The ability to create jobs in Parallel and/or Server Engines is perplexing.

Who is IBM - InfoSphere Information Server best suited for?

It is best suited for an organization that wants assistance in extracting more value from the complex, heterogeneous information scattered across its systems.

41. Logstash

Logstash is a real-time data processing pipeline from Elastic. It ingests data, logs, and events from a wide range of sources, processes them, and sends the results to a destination such as Elasticsearch.
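Logstash pipelines are organized into input, filter, and output stages. The Python sketch below mimics that structure to show how events flow through such a pipeline; it does not use Logstash itself. The `<LEVEL> <message>` log format, the `parse_failure` tag, and the function names are hypothetical, and a plain list stands in for Elasticsearch.

```python
import re
from typing import Dict, Iterable, Iterator, List

# Hypothetical log format "<LEVEL> <message>", assumed for illustration only.
LINE_PATTERN = re.compile(r"^(?P<level>[A-Z]+)\s+(?P<message>.*)$")


def input_stage(lines: Iterable[str]) -> Iterator[str]:
    """Input: emit each non-blank raw line as an event."""
    for line in lines:
        line = line.strip()
        if line:
            yield line


def filter_stage(events: Iterable[str]) -> Iterator[Dict[str, object]]:
    """Filter: parse raw lines into structured fields and drop DEBUG noise."""
    for raw in events:
        match = LINE_PATTERN.match(raw)
        if match is None:
            # Keep unparseable events, but tag them so they can be inspected later.
            yield {"message": raw, "tags": ["parse_failure"]}
            continue
        event = match.groupdict()
        if event["level"] == "DEBUG":
            continue
        yield event


def output_stage(events: Iterable[Dict[str, object]], sink: List[Dict[str, object]]) -> None:
    """Output: append events to an in-memory list standing in for Elasticsearch."""
    sink.extend(events)


stored: List[Dict[str, object]] = []
raw_lines = ["INFO service started", "DEBUG cache warmed", "", "something unexpected"]
output_stage(filter_stage(input_stage(raw_lines)), stored)
print(stored)
```

Chaining generators means each event streams through all three stages without buffering the whole input, which is the same reason real-time pipelines process events one at a time rather than in large batches.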

Top Features

- Data transformation

- Data filtering

- Data analysis

- Managed file transfers: ad hoc file transfers over FTP, HTTP, and other protocols.

- Data extraction: extracts data from various databases and files.

- API integration: makes it simple to integrate logic or data with other software applications.

Pricing

Logstash is offered as a free download and as a subscription with other Elastic Stack products starting at $16 per month.

Pros

-

Logstash is open-source and was created using open-source tools.

-

Logstash is incredibly easy to set up and allows us to retain configuration files in plaintext format.

-

The plugin ecosystem supports modular extensions.

Cons

- Logstash is resource-hungry, which is a problem when deploying it on commodity hardware.

-