
Real-Time Data Integration - Tools | Benefits | Use Cases

What is real-time data integration?

Real-time data integration is the process of transferring information from one system to another in a matter of milliseconds instead of minutes or hours.

For data teams that need near real-time data (synced as frequently as every 15 minutes), Portable offers a simple and effective way to sync data into your data warehouse, with more than 300 no-code ETL connectors that move data fast.

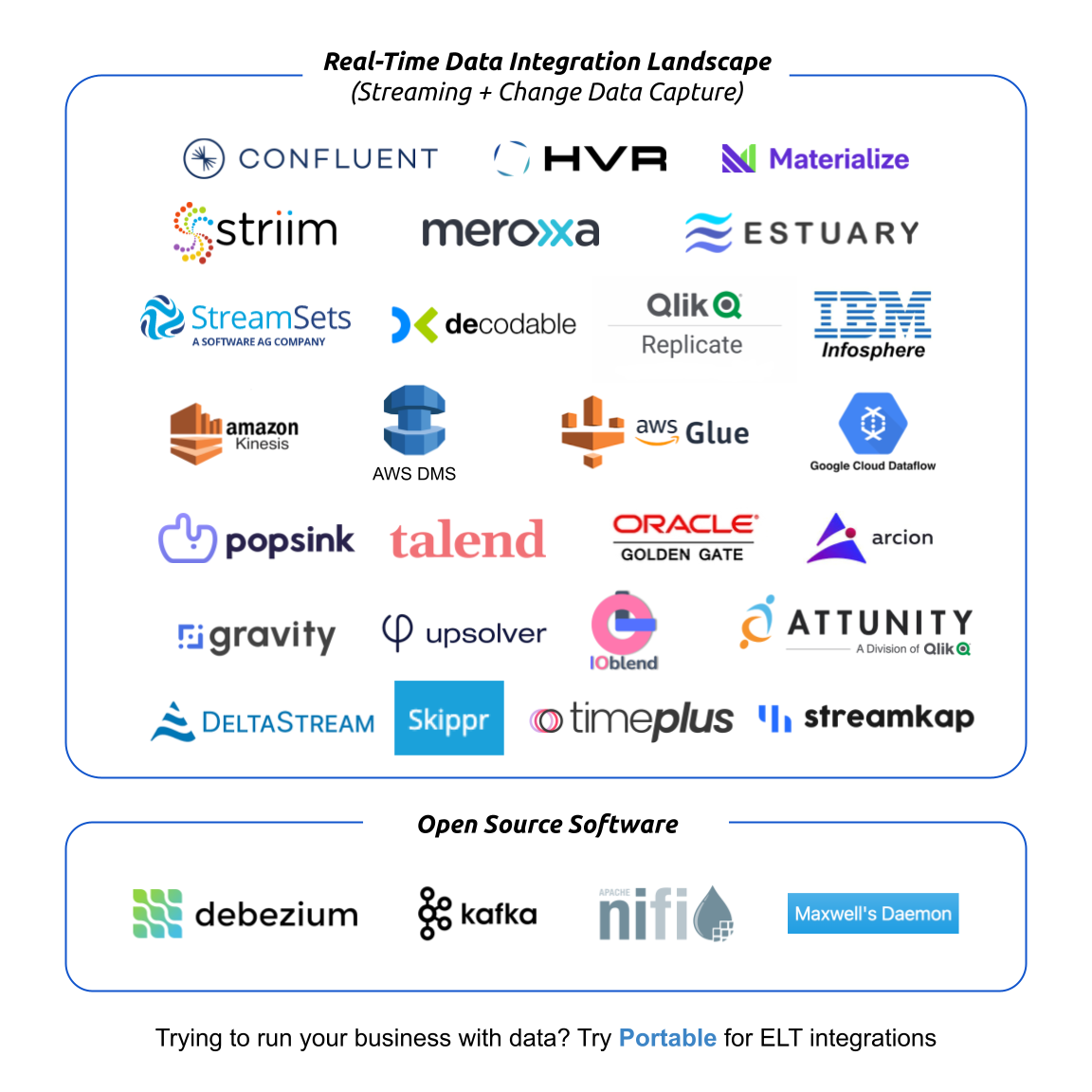

Real-time data integration tools list (2023 Update)

Here are the real-time data integration tools you should evaluate:

- Apache NiFi (Open Source)

- Arcion

- Attunity

- AWS DMS

- AWS Glue

- Amazon Kinesis

- Confluent

- Debezium (Open Source)

- Decodable

- DeltaStream

- Google Cloud Dataflow

- Gravity Data

- HVR

- IBM InfoSphere

- IOblend

- Kafka (Open Source)

- Materialize

- Maxwell's Daemon (Open Source)

- Meroxa

- Oracle GoldenGate

- Popsink

- Qlik Replicate

- RisingWave

- Skippr

- StreamSets

- Streamkap

- Striim

- Talend

- Timeplus

- Upsolver

Do data teams need real-time data integration?

Most business intelligence teams do not need real-time data integration for common analytics or automation use cases. They simply need near real-time data processing (measured in minutes rather than milliseconds). If your goal is to analyze data from your CRM system to reduce churn or to create a single customer view, you most likely don't need a real-time pipeline to do so.
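To make "near real-time" concrete, here is a minimal Python sketch of the core of an incremental sync job you might run on a schedule (for example, every 15 minutes). It copies only rows whose `updated_at` timestamp is newer than the previous run's high-water mark. The sample rows, the `updated_at` field, and the `incremental_sync` function are hypothetical and are not taken from any specific tool.

```python
from datetime import datetime, timezone

# Hypothetical source rows; in practice these would come from a CRM API or a database query.
SOURCE_ROWS = [
    {"id": 1, "updated_at": datetime(2023, 5, 1, 9, 0, tzinfo=timezone.utc)},
    {"id": 2, "updated_at": datetime(2023, 5, 1, 9, 7, tzinfo=timezone.utc)},
    {"id": 3, "updated_at": datetime(2023, 5, 1, 9, 16, tzinfo=timezone.utc)},
]


def incremental_sync(rows, watermark):
    """Return rows changed after `watermark`, plus the new watermark.

    Run on a fixed cadence, each call copies only what changed since the
    previous call instead of re-copying the whole table.
    """
    changed = [r for r in rows if r["updated_at"] > watermark]
    # Advance the high-water mark to the newest change seen; keep it if nothing changed.
    new_watermark = max((r["updated_at"] for r in changed), default=watermark)
    return changed, new_watermark
```

Starting from a watermark of 09:05, the first call returns rows 2 and 3 and advances the watermark to 09:16; an immediate second call returns nothing. The work per run stays small, and the trade-off is that data can be up to one sync interval old.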

Even so, most data teams are exposed to real-time data integration when they use a change data capture (CDC) solution to mirror changes from their relational database (PostgreSQL, MySQL, SQL Server) into their data warehouse for analytics.
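Conceptually, CDC keeps the warehouse copy current by replaying row-level change events instead of re-copying whole tables. The Python sketch below assumes a simplified, hypothetical event format (`op`, `key`, `row`) and an in-memory dictionary standing in for the warehouse table; real CDC tools such as Debezium emit richer records (including before and after images of the row), and the warehouse-side merge is often done in SQL.

```python
from typing import Any

# Hypothetical change events, loosely modeled on the insert/update/delete
# records a CDC tool reads from a database's transaction log.
CHANGES = [
    {"op": "insert", "key": 1, "row": {"id": 1, "status": "trial"}},
    {"op": "insert", "key": 2, "row": {"id": 2, "status": "trial"}},
    {"op": "update", "key": 1, "row": {"id": 1, "status": "paid"}},
    {"op": "delete", "key": 2, "row": None},
]


def apply_changes(replica: dict[int, dict[str, Any]], changes) -> dict[int, dict[str, Any]]:
    """Mirror source changes into `replica` (modified in place), keyed by primary key."""
    for event in changes:
        if event["op"] in ("insert", "update"):
            # Upsert: inserts and updates both overwrite the row for this key.
            replica[event["key"]] = event["row"]
        elif event["op"] == "delete":
            # Remove the row; pop() with a default tolerates keys already absent.
            replica.pop(event["key"], None)
        else:
            raise ValueError(f"unknown op: {event['op']}")
    return replica
```

Applying the four events to an empty replica leaves a single row, `{1: {"id": 1, "status": "paid"}}`, which matches the current state of the hypothetical source table.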

As with any other component of your data management stack, it's important to consider the value you can create for business users along with the scalability of the technical data platform you are building.

You need to ask yourself: How does my data platform improve data analytics? Am I able to automate previously manual workloads? Can I sell a new data product to clients if I leverage a real-time pipeline?

Real-time processing is powerful, but you need to make sure it creates value for your specific business.

What are the benefits of real-time integration?

Real-time data pipelines provide low-latency, high-throughput data processing. The benefits include:

- Disparate systems remain in sync (enhancing data quality)

- Data is processed only once (as incremental changes instead of repeated full snapshots), which can save money

- Data can be aggregated before it is loaded into the destination, reducing storage and compute costs downstream
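As an illustration of the last benefit, the following Python sketch rolls hypothetical page-view events up into one-minute tumbling windows, so the destination receives one row per window and page instead of one row per event. It runs over an in-memory list for simplicity; a stream processor would emit each window's counts as the window closes.

```python
from collections import defaultdict

# Hypothetical page-view events: (epoch_seconds, page)
EVENTS = [
    (0, "/pricing"),
    (12, "/pricing"),
    (45, "/docs"),
    (61, "/pricing"),
    (119, "/docs"),
]


def tumbling_window_counts(events, window_seconds=60):
    """Count events per (window start, page) so only aggregates are loaded."""
    counts = defaultdict(int)
    for ts, page in events:
        window_start = ts - ts % window_seconds  # align timestamp to its window boundary
        counts[(window_start, page)] += 1
    return dict(counts)
```

Here five raw events collapse into four aggregate rows; with real traffic volumes the reduction is usually much larger, which is where the downstream storage and compute savings come from.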

In addition to the benefits above, real-time data pipelines are an effective approach to data ingestion when you are working with big data sets.

When you have data from different sources (internal applications, data providers, partners) that you need to process and keep in sync, a streaming pipeline can offer a strong backbone for real-time analytics, data visualization, or machine learning at scale.

These benefits are available because of the unique technical architecture of real-time pipelines.

How does real-time data integration work?

Real-time data processing involves five steps:

- The upstream system exposes change logs

- The real-time integration platform captures the change logs

- The data integration platform processes the data as it arrives (i.e., stream processing)

- The integration solution pushes the results of processing into a destination system

- A downstream action takes place
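The five steps above can be sketched as a chain of Python generators, where each record passes through capture, processing, and loading before the next one is read. Everything here is hypothetical and in-memory: the `CHANGE_LOG` list stands in for a database change log, the `destination` list for a warehouse table, and the `is_large` flag for whatever transformation you need. A production pipeline would rely on a platform such as Kafka or one of the tools listed above for durability and scale.

```python
from typing import Callable, Iterable, Iterator

# Step 1 (hypothetical): the upstream system exposes a change log.
CHANGE_LOG = [
    {"order_id": 101, "amount": 40.0},
    {"order_id": 102, "amount": 250.0},
    {"order_id": 103, "amount": 15.5},
]


def capture(log: Iterable[dict]) -> Iterator[dict]:
    """Step 2: read change records one at a time, as they arrive."""
    for record in log:
        yield record


def process(records: Iterable[dict]) -> Iterator[dict]:
    """Step 3: transform each record in flight (stream processing)."""
    for record in records:
        yield {**record, "is_large": record["amount"] >= 100}


def push(records: Iterable[dict], destination: list) -> Iterator[dict]:
    """Step 4: write each result to the destination, then pass it along."""
    for record in records:
        destination.append(record)
        yield record


def run_pipeline(log: Iterable[dict], destination: list, on_record: Callable[[dict], None]) -> None:
    """Chain the steps lazily; step 5 is a downstream action per loaded record."""
    for record in push(process(capture(log)), destination):
        on_record(record)
```

Because generators are lazy, no stage waits for a full batch. For example, passing a callback that records the `order_id` of large orders would flag only order 102, right after it lands in the destination.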

The difference between batch and streaming: batch processing syncs data on a fixed cadence, whereas a streaming data pipeline incrementally applies data transformations as new information arrives.
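A toy Python contrast of the two models, using a running revenue total as a hypothetical metric: the batch function rescans the full snapshot each time it is scheduled, while the streaming object keeps state and updates it as each event arrives. Both reach the same answer; the difference is when the work happens and how much of it there is.

```python
# Hypothetical order amounts, in arrival order.
ORDERS = [40.0, 250.0, 15.5, 99.5]


def batch_total(snapshot: list[float]) -> float:
    """Batch: on each scheduled run, recompute from the full snapshot."""
    return sum(snapshot)


class StreamingTotal:
    """Streaming: keep state and apply each new value incrementally."""

    def __init__(self) -> None:
        self.total = 0.0

    def on_event(self, amount: float) -> float:
        self.total += amount  # constant work per event instead of a full rescan
        return self.total
```

Feeding `ORDERS` into `StreamingTotal.on_event` one at a time yields an up-to-date total after every order, ending at 405.0, the same value `batch_total(ORDERS)` produces in a single scheduled run.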

What are the use cases for real-time data?

Real-time data processing is valuable for:

- Product interfaces that require up-to-the-second data (like displaying an account balance at an ATM)

- High-frequency trading

- Real-time customer journey personalization

- Preventing fraud

- Managing eCommerce inventory (to optimize commerce flows)

- Understanding supply chain bottlenecks

- Freight tracking

Unlocking these use cases can be challenging: you need to stand up the infrastructure, architect a technology stack, and keep everything running cost-effectively. As a result, most teams either 1) engage a data consultant to accelerate the process, or 2) hire a senior developer in-house to manage the technology.

Which use cases do not require real-time data?

Core analytics use cases (creating a unified view of the customer journey, analyzing your email subscriber engagement, powering strategic decision-making) do not require real-time data processing.

While it can still make sense to leverage a real-time integration platform in select situations, doing so can lead to longer setup times and higher cloud bills, depending on the use case.

In these types of scenarios, a no-code ETL pipeline can be a simple and effective approach.

You simply connect to the source systems you care about (CRM, ERP, IoT devices, Microsoft Excel, etc.) and load the data sets into your warehouse or data lake.

What are the most common destinations for a real-time pipeline?

Most data pipelines are used for either: 1) analytics, 2) process automation, or 3) product development. The most common destinations are:

- Transactional databases (PostgreSQL, MySQL, etc.)

- File stores (Google Cloud Storage, Amazon S3, Azure Blob Storage, etc.)

- Data warehouses (Snowflake, BigQuery, Amazon Redshift, etc.)

- Data lakes (Databricks, Parquet files processed with Spark, etc.)

- APIs exposed by SaaS applications (Mailchimp, Klaviyo, Chargebee, etc.)

For near real-time data integration, what's the simplest solution?

If you need near real-time data integrations, you can get started with ETL in minutes using Portable's cloud-based solution.

- Sign up for a free account (no credit card required)

- Connect a source or request a custom connector

- Authenticate with your source credentials

- Select a destination and configure your credentials

- Create a flow connecting your data source to your analytics environment

- Initiate the flow to replicate data from your source to your warehouse

- Use the dropdown menu to set a cadence for your data flow